(Note: This is the second article in a series about how we scaled Shortcut's backend. For the first post, click here.)

Measure Twice, Cut Once

As the first step toward breaking up our backend monolith, we had to address a practical problem: How would we develop on a codebase consisting of multiple applications?

As I described in the first post in this series, a monolith has the advantages of tight integration and code reuse. These advantages become constraints when it comes to factoring out separate applications from what was once a single codebase. As soon as we looked at the problem in depth, it was obvious that new applications split off from the monolith would have to share both code and a database.

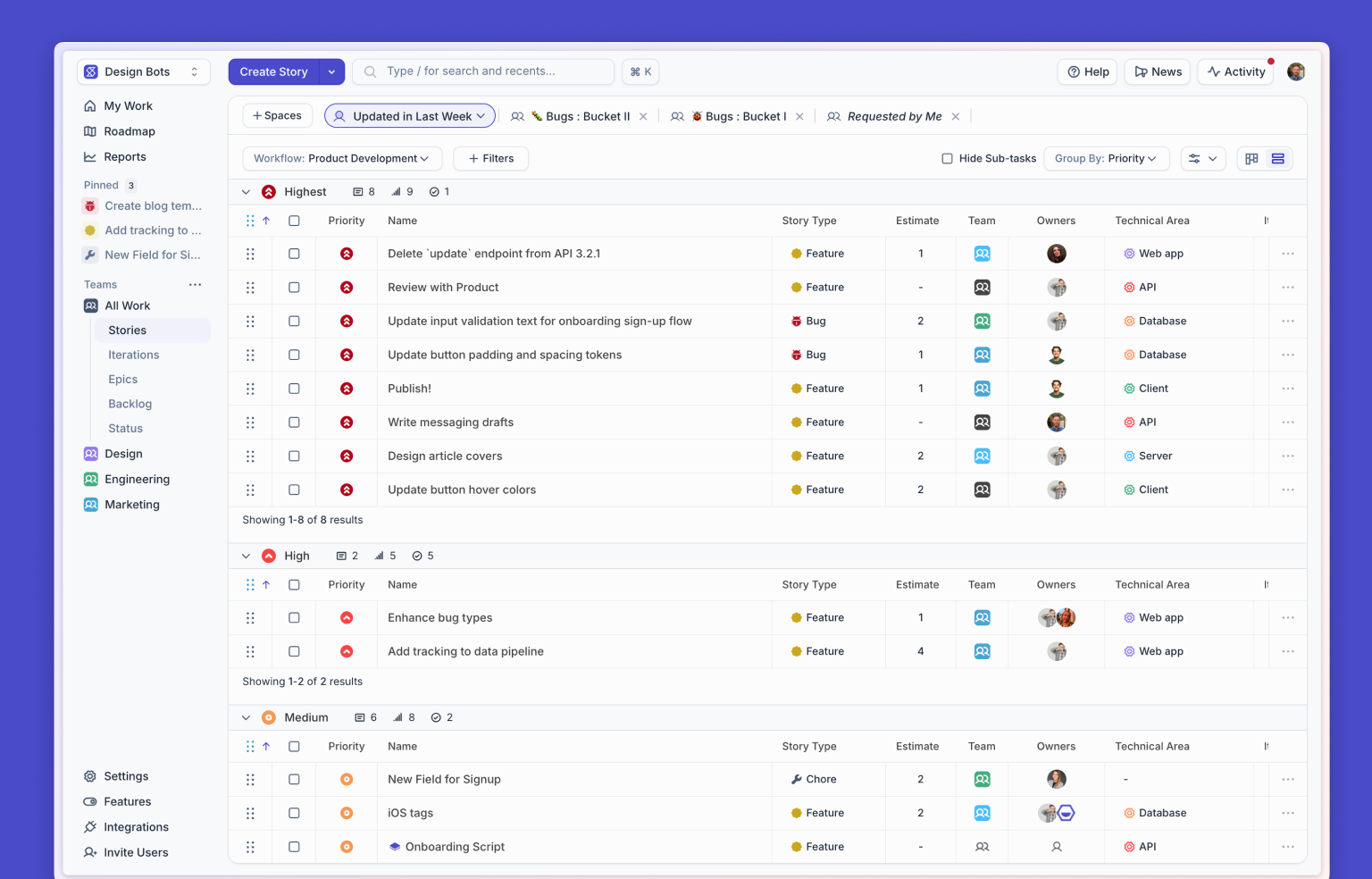

In the service-oriented architecture (SOA) world, having multiple services share one database is often considered an anti-pattern. We probably wouldn’t have chosen this design if we were starting from scratch. But we weren’t starting from scratch. The database and related code were critical to maintaining the “data model” of the product as a whole. (For example, every change to a Shortcut Story generates metadata to support Story History and the Activity Feed.) We could not simply discard this functionality, and trying to reimplement it could have quickly turned into the “big bang” rewrite we wanted to avoid.


At the same time, we knew we couldn’t afford to pause work on new features — that’s just startup life. We would need to perform major refactoring on shared code while requirements were still changing. Maintaining duplicate versions and keeping them in sync would have been extremely tedious. To make the refactoring feasible, we needed a single working copy of every piece of code, even as that code moved from one application to another. Our monolith needed to become a mono-repo.

Repo, REPL, refactor!

Since we were working in Clojure, we were used to a REPL-driven development style, interacting with a running program and applying changes without restarts. We wanted to preserve this workflow even as we worked across applications in our mono-repo. Existing build tools didn’t quite fit this use case, so we decided to roll our own solution. We didn’t want to invent a new build tool from scratch, but we were able to get the workflow we wanted by combining several well-known technologies.

In a JVM-based environment, including Clojure, the first job of any build tool is to assemble a classpath. The Java Classpath, a simple list of directories and files from which to load code, is an under-appreciated feature of the JVM. Although far from perfect, it avoids many of the filesystem conflicts that plague other language runtimes.
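To make the "simple list" point concrete, here is a minimal sketch of a classpath treated as plain data. The function names and the sample entries are made up for illustration; the only real pieces are the platform path separator and the standard string functions.

```clojure
(require '[clojure.string :as str])

(defn classpath-string
  "Joins directories and jar files into a single classpath string,
   using the platform separator (':' on Unix, ';' on Windows)."
  [entries]
  (str/join java.io.File/pathSeparator entries))

(defn split-classpath
  "Splits a classpath string back into its individual entries."
  [cp]
  (str/split cp
             (re-pattern
              (java.util.regex.Pattern/quote java.io.File/pathSeparator))))

;; Hypothetical entries: two source directories and one jar.
(def example-classpath
  (classpath-string ["api/src" "util/src" "lib/example.jar"]))
```

In a running JVM, the actual value is available from `(System/getProperty "java.class.path")`, which can be passed to `split-classpath` to see what a REPL was launched with.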

For interactive development in Clojure, we wanted all our source code on the classpath, emulating the development workflow we had enjoyed with the monolith. For deployment, however, we wanted each application packaged with just its own source code and its dependencies. Smaller artifacts make for faster deployments and reduce the risk of unexpected conflicts in production code.


To achieve this, we defined our own concept of a module, basically just a directory in our backend mono-repo. Some modules are deployable applications, others just shared libraries. Each module has a configuration file, called clubhouse-module.edn, that specifies its dependencies on external libraries and other modules in the mono-repo.
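As a rough illustration of the idea, the sketch below shows what such module configurations might look like and how source paths could be computed for one module or for all of them. The key names (`:module-deps`, `:library-deps`), the module names, the library coordinates, and the `<module>/src` layout are all assumptions made for this example, not the actual format of clubhouse-module.edn.

```clojure
(require '[clojure.edn :as edn])

;; Hypothetical clubhouse-module.edn content for an "api" module.
(def api-module-edn
  "{:module-deps  [\"model\"]
    :library-deps [[example/http-lib \"1.0.0\"]]}")

;; Hypothetical configurations for three modules, keyed by directory name.
(def module-configs
  {"api"   (edn/read-string api-module-edn)
   "model" '{:module-deps ["util"] :library-deps [[example/db-lib "2.0.0"]]}
   "util"  '{:module-deps [] :library-deps []}})

(defn transitive-modules
  "Returns the set containing `module-name` and every module it
   depends on, directly or indirectly."
  [configs module-name]
  (loop [seen #{} todo [module-name]]
    (if-let [m (first todo)]
      (if (seen m)
        (recur seen (rest todo))
        (recur (conj seen m)
               (into (vec (rest todo))
                     (get-in configs [m :module-deps]))))
      seen)))

(defn source-paths
  "Source directories needed by the given modules, assuming each
   module keeps its code under <module>/src."
  [configs module-names]
  (->> module-names
       (mapcat #(transitive-modules configs %))
       (into (sorted-set))
       (mapv #(str % "/src"))))

;; Isolated to one module (plus what it depends on):
(source-paths module-configs ["model"])
;; Everything at once, as for interactive development:
(source-paths module-configs (keys module-configs))
```

The same function serves both cases described above: pass one module for a deployment-sized classpath, or every module for a monolith-style REPL.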

We were already using Leiningen (lein), a popular Clojure build tool, to manage external dependencies. We wrote a small Clojure program called module-helper that reads clubhouse-module.edn and generates project.clj, the configuration used by Leiningen. The generated project.clj includes source paths of the current module and other modules it depends on.

module-helper was easy to write because our clubhouse-module.edn and Leiningen’s project.clj are both written as literal Clojure data structures: the former is EDN, and the latter is a defproject form that reads as plain data. Transforming one data structure into another is what Clojure does best. (This is one benefit of homoiconicity in a programming language.)
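The following is a minimal sketch of that kind of transformation, not the real module-helper. It assumes a hypothetical `:library-deps` key in the module configuration and a placeholder version string; `defproject`, `:dependencies`, and `:source-paths` are standard Leiningen names.

```clojure
(require '[clojure.edn :as edn]
         '[clojure.pprint :as pprint])

(defn project-form
  "Builds a Leiningen defproject form, as plain data, for one module.
   `source-paths` is the already-resolved list of source directories."
  [module-name {:keys [library-deps]} source-paths]
  (list 'defproject (symbol module-name) "0.1.0-SNAPSHOT"
        :dependencies (vec library-deps)
        :source-paths (vec source-paths)))

(defn project-clj-text
  "Renders the form as text suitable for writing to project.clj."
  [form]
  (with-out-str (pprint/pprint form)))

;; Hypothetical module configuration, parsed from EDN text.
(def api-config
  (edn/read-string "{:library-deps [[example/http-lib \"1.0.0\"]]}"))

;; The module's own sources plus those of a module it depends on.
(def api-project
  (project-form "api" api-config ["src" "../util/src"]))

(print (project-clj-text api-project))
```

Because the output is built as a list and only printed at the end, there is no string templating involved: the generated file is whatever the data structure prints as.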

To keep those generated files up-to-date, we tapped another old, under-appreciated technology, the humble Makefile. Tying it all together, a launcher script (in Bash) calls make to ensure all the configuration files are up-to-date, then uses the generated classpath to launch a Clojure REPL (see diagram). We can launch a REPL isolated to a single module or include code from all modules at once. Since all the intermediate steps are cached in files, the make step takes almost no time after the first build.


This may look complicated on the surface, because there are a lot of moving parts, but each part is simple: read data in, write data out. It only took a day to bang out the first version of module-helper, with occasional fixes and improvements after that.

Working with old, established tools like GNU make has an upside: Whenever we needed more complex behavior, we found that make already had a well-documented solution. For example, dependencies between our modules are not known until after running module-helper, so our original Makefile didn’t always know when a project.clj needed rebuilding. Then we learned about Automatic Prerequisites. All we had to do was extend module-helper to generate a file of dependencies between modules. That file (module-deps.mk) is incorporated into the make dependency graph on subsequent runs.
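Here is a sketch of how a generated dependency file could be produced, under stated assumptions: the rule shape (each module's project.clj depending on its own clubhouse-module.edn and those of the modules it uses) and the function names are guesses for illustration, not the actual contents of module-deps.mk.

```clojure
(require '[clojure.string :as str])

(defn make-rule
  "One make rule: the module's project.clj must be rebuilt when its own
   configuration, or that of any module it depends on, changes."
  [module-name dep-modules]
  (str module-name "/project.clj: "
       (str/join " "
                 (map #(str % "/clubhouse-module.edn")
                      (cons module-name dep-modules)))))

(defn module-deps-mk
  "Renders the rules for every module, in a stable order, as file text."
  [deps-by-module]
  (str (str/join "\n"
                 (for [[module deps] (sort-by key deps-by-module)]
                   (make-rule module deps)))
       "\n"))

;; Hypothetical modules and their dependencies on each other.
(print (module-deps-mk {"api" ["model" "util"]
                        "model" ["util"]
                        "util" []}))
```

A Makefile can pull a generated file like this into its dependency graph with GNU make's `include` directive, which is the mechanism the Automatic Prerequisites technique relies on.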

Data In, Data Out

We've been using this repository and build structure for a couple of years now, and I think I can safely call it a success. It enabled us to introduce new module boundaries just by moving code into different directories, without breaking anything and without disrupting ongoing feature work.

The pattern was straightforward enough that our devops specialist successfully copied it for our deployment tooling, even though he was new to Clojure at the time.

Build tooling doesn't have to be complicated. All the relevant problems have been solved a dozen times or more. Many build tools, including Leiningen, have a plug-in architecture for custom extensions, but we just used its built-in capabilities. Thinking of a tool as a simple data transformer, rather than an API to program within, frees you to think about higher-level abstractions, such as our “modules.” As the saying goes, every problem can be solved by adding another layer of indirection.

Coming up next: Now that we have a story for developing across modules, it's time to make the first real cut to the monolith.
